Notes on: Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting

Notes on the abstract:

Recent studies have shown the potential of the Transformer to increase prediction capacity, which is needed in long sequence time-series forecasting (LSTF).

Features of Informer:

- a ProbSparse self-attention mechanism.

- Self-attention distilling: highlights dominating attention by halving the cascading layer input, and efficiently handles extremely long input sequences.

- Generative style decoder: conceptually simple; predicts long time-series sequences in one forward operation rather than step by step.
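To make the "halving" in the distilling step concrete, here is a minimal sketch of only the pooling part. It assumes a max-pool with kernel 3, stride 2, and padding 1 on a single-channel sequence; the convolution and activation that precede the pooling in the paper are left out, and the helper names `max_pool_1d` and `distill_lengths` are made up for this note.

```python
def max_pool_1d(seq, kernel=3, stride=2, padding=1):
    """Max-pool a 1-D list of floats; with stride 2 the length is halved."""
    neg_inf = float("-inf")
    padded = [neg_inf] * padding + list(seq) + [neg_inf] * padding
    out = []
    for start in range(0, len(padded) - kernel + 1, stride):
        out.append(max(padded[start:start + kernel]))
    return out


def distill_lengths(seq_len, num_layers):
    """Sequence length seen by each layer when pooling is applied between layers."""
    seq = [0.0] * seq_len
    lengths = [len(seq)]
    for _ in range(num_layers):
        seq = max_pool_1d(seq)  # assumption: pooling only, no conv/activation
        lengths.append(len(seq))
    return lengths


if __name__ == "__main__":
    print(max_pool_1d([1.0, 5.0, 2.0, 4.0]))
    print(distill_lengths(96, 3))
```

Each later layer then works on half as many positions as the one before it, which is where the memory saving for long inputs comes from.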

Major challenges for LSTF:

- extraordinary long-range alignment ability

- efficient operations on long sequence inputs and outputs

Self-attention: it reduces the maximum path length that signals travel through the network to the theoretical shortest, O(1), and avoids the recurrent structure.

Problem: computation and memory consumption are quadratic in the sequence length L (a well-worn point).
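A small sketch of canonical scaled dot-product attention shows where the quadratic cost comes from: the score matrix has one entry per (query, key) pair. Plain Python lists are used for readability; a real implementation would use a tensor library. The function names are my own.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def canonical_attention(Q, K, V):
    """Scaled dot-product attention on lists of vectors.

    Q and K hold L vectors of dimension d; V holds L value vectors.
    The intermediate score matrix has L * L entries.
    """
    d = len(Q[0])
    scores = [
        [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K]
        for q in Q
    ]
    weights = [softmax(row) for row in scores]
    out = [
        [sum(w * v[col] for w, v in zip(row, V)) for col in range(len(V[0]))]
        for row in weights
    ]
    return out, weights


def score_entries(seq_len):
    """Number of attention scores computed for a sequence of length seq_len."""
    return seq_len * seq_len


if __name__ == "__main__":
    Q = K = V = [[1.0, 0.0], [0.0, 1.0]]
    out, weights = canonical_attention(Q, K, V)
    print(weights)
    print(score_entries(96), score_entries(192))
```

Doubling the input length from 96 to 192 quadruples the number of scores, in both time and memory.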

Aim: improve the computation, memory, and architecture efficiency of Transformers.

Limitations of vanilla transformers:

- quadratic computation of self-attention

- memory bottleneck in stacking layers for long inputs.

- speed plunge in predicting long outputs
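The third limitation comes from step-by-step decoding: one forward pass per predicted step. As I understand the paper, the generative decoder instead feeds a start-token slice taken from the known history, concatenated with zero placeholders for the target positions, and predicts all targets in a single pass. A minimal sketch of building that input follows; the names `build_decoder_input`, `label_len`, and `pred_len` are assumptions chosen for this note, and no model is included.

```python
def build_decoder_input(history, label_len, pred_len):
    """Concatenate the last label_len history steps with pred_len zero placeholders."""
    if not 1 <= label_len <= len(history):
        raise ValueError("label_len must be between 1 and len(history)")
    dim = len(history[0])
    start_token = [list(step) for step in history[-label_len:]]
    placeholders = [[0.0] * dim for _ in range(pred_len)]
    return start_token + placeholders


def forward_passes(pred_len, generative):
    """Decoder forward passes needed to produce pred_len outputs."""
    return 1 if generative else pred_len


if __name__ == "__main__":
    history = [[float(t)] for t in range(10)]
    print(build_decoder_input(history, label_len=4, pred_len=3))
    print(forward_passes(24, generative=False), forward_passes(24, generative=True))
```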

Contributions:

- Propose Informer, which successfully enhances prediction capacity on the LSTF problem and validates the potential of Transformer-like models to capture individual long-range dependencies between the outputs and inputs of long sequence time series.

- ProbSparse self-attention mechanism: efficiently replaces canonical self-attention and achieves O(L log L) time complexity and O(L log L) memory usage.

- Self-attention distilling operation: privileges dominating attention scores in J-stacking layers and sharply reduces the total space complexity to O((2-ε)L log L).

- Generative style decoder: acquires long sequence output with only one forward step, while avoiding cumulative error spreading during the inference phase.
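A rough sketch of the ProbSparse idea, as I recall it from the paper rather than from these notes: score each query by "max minus mean" of its scaled dot products with the keys, keep only the top u = c·ln L queries for real attention, and give the remaining queries the mean of V. Note the simplification: this sketch computes the full score matrix for clarity, so it does not reach O(L log L); the paper samples keys to estimate the measure. The function name and the `factor` parameter (the constant c) are assumptions.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def prob_sparse_attention(Q, K, V, factor=5):
    """Attention where only the top-u 'active' queries attend; others output mean(V)."""
    num_q, d = len(Q), len(Q[0])
    width = len(V[0])
    # Simplification: full scores; the paper estimates the measure on sampled keys.
    scores = [
        [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K]
        for q in Q
    ]
    # Sparsity measure per query: max score minus mean score.
    sparsity = [max(row) - sum(row) / len(row) for row in scores]
    u = min(num_q, max(1, math.ceil(factor * math.log(num_q))))
    active = sorted(range(num_q), key=lambda i: sparsity[i], reverse=True)[:u]
    # "Lazy" queries fall back to the mean of the values.
    mean_v = [sum(v[col] for v in V) / len(V) for col in range(width)]
    out = [list(mean_v) for _ in range(num_q)]
    for i in active:
        w = softmax(scores[i])
        out[i] = [sum(wj * v[col] for wj, v in zip(w, V)) for col in range(width)]
    return out, sorted(active)


if __name__ == "__main__":
    Q = [[0.0, 0.0], [0.0, 0.0], [5.0, 0.0], [0.0, 0.0]]
    K = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, 0.0]]
    V = [[1.0], [2.0], [3.0], [4.0]]
    print(prob_sparse_attention(Q, K, V, factor=1))
```

In the usage example only the third query has a peaked score distribution, so it is always selected; queries whose scores are flat would produce roughly mean(V) anyway, which is why skipping them loses little.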

Thinking:

Channel attention in the Transformer: it shows a performance increase of 1%, which supports the effectiveness of the proposed approach. But how should the motivation be formulated?

I think I should propose some fusion approach to deal with intermediate-layer feature maps. But how?

Approach: search "embedding feature fusion" on Google Scholar.

Recall: ResNeSt's attention mechanism.

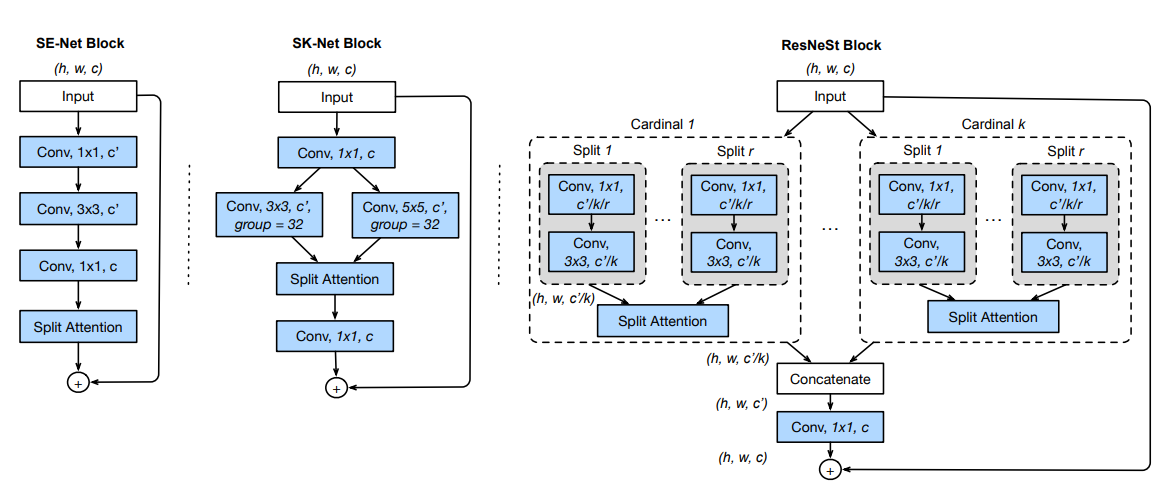

Figure 2: SE-Net Block:

Caption of Figure 2: comparing the ResNeSt block with SE-Net and SK-Net. A detailed view of the Split-Attention unit is shown in Figure 3. For simplicity, the ResNeSt block is shown in cardinality-major view (the feature-map groups with the same cardinal group index reside next to each other). The real implementation uses radix-major, which can be modularized and accelerated by group convolution and standard CNN layers.

Feature-map groups: their number is determined by the cardinality hyperparameter K.

Radix hyperparameter: R indicates the number of splits within a cardinal group.

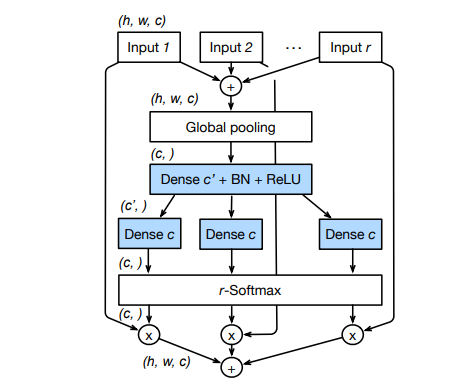

Figure 3:

Note: Split Attention Mechanism:

- Sum the outputs of the multiple radix splits to get the total information. [Compare: summing the outputs of multiple conv groups in SK-Net, multiple channels in SE-Net.]

- Perform global pooling, then apply a dense layer and BN to the pooled output.

- Calculate the attention weights using separate dense layers.

- Output the sum of the products of each attention weight with its original input.
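The four steps above can be sketched for a single cardinal group as follows. Assumptions, labeled here and in the code: BN is omitted, a ReLU follows the shared dense layer, the attention weights are normalized with a softmax across the R splits per channel, and the weight matrices are passed in by hand. The function names are my own.

```python
import math


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def split_attention(splits, W_shared, W_per_split):
    """splits: R feature maps, each C channels of N values.

    W_shared: hidden x C matrix; W_per_split: R matrices of shape C x hidden.
    """
    R, C, N = len(splits), len(splits[0]), len(splits[0][0])
    # Step 1: element-wise sum over the R splits.
    fused = [[sum(splits[r][c][n] for r in range(R)) for n in range(N)]
             for c in range(C)]
    # Step 2: global average pooling, shared dense layer.
    # Assumption: BN omitted, ReLU applied after the dense layer.
    pooled = [sum(channel) / N for channel in fused]
    hidden = [max(0.0, h) for h in matvec(W_shared, pooled)]
    # Step 3: one dense layer per split, then softmax across splits per channel.
    logits = [matvec(W_per_split[r], hidden) for r in range(R)]
    weights = [[0.0] * C for _ in range(R)]
    for c in range(C):
        m = max(logits[r][c] for r in range(R))
        exps = [math.exp(logits[r][c] - m) for r in range(R)]
        total = sum(exps)
        for r in range(R):
            weights[r][c] = exps[r] / total
    # Step 4: weighted sum of the original splits.
    out = [[sum(weights[r][c] * splits[r][c][n] for r in range(R))
            for n in range(N)] for c in range(C)]
    return out, weights


if __name__ == "__main__":
    splits = [[[1.0, 1.0], [2.0, 2.0]], [[3.0, 3.0], [4.0, 4.0]]]
    W_shared = [[0.0, 0.0]]                           # hidden size 1
    W_per_split = [[[1.0], [1.0]], [[-1.0], [-1.0]]]  # R = 2, each C x hidden
    print(split_attention(splits, W_shared, W_per_split))
```

With the zero shared weights in the usage example, every logit is 0, so both splits get weight 0.5 and the output is their plain average.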

Add some tweaks to the Split Attention mechanism?

Difference: Self-attention: calculates the attention weight for each sequence element using the product of the query and key vectors.

Channel Attention: Self-attention: calculate

Split Attention: uses different dense layers to calculate the attention weight for each channel.

References with identification strings

[1] Tran Son N, Nguyen Dung, Vu Tung-son Ngo Xuan-son, Hoang Long, Zhang Qing, Karunanithi Mohan. On multi-resident activity recognition in ambient smart-homes. Artificial Intelligence Review. (2020) 53:3929–3945 tran2020multi

[2] Aymen Shaafi, Osman Salem, Ahmed Mehaoua: Improving Human Activity Recognition Algorithms using Wireless Body Sensors and SVM. IWCMC 2020: 607-612 shaafi2020improving

[3] Bilal M'hamed Abidine, Belkacem Fergani, Anthony Fleury: Integrating Prior Knowledge in Weighted SVM for Human Activity Recognition in Smart Home. ICOST 2017: 233-239 fergani2017integrating

[4] Yixiao Yun, Keren Fu, Irene Yu-Hua Gu, Hamid K. Aghajan, Jie Yang: Human Activity Recognition in Images using SVMs and Geodesics on Smooth Manifolds. ICDSC 2014: 20:1-20:6 yun2014human

[5] Haitao Wu, Wei Pan, Xingyu Xiong, Suxia Xu: Human activity recognition based on the combined SVM&HMM. ICIA 2014: 219-224 wu2014human

[6] Mariana Abreu, Marília Barandas, Ricardo Leonardo, Hugo Gamboa: Detailed Human Activity Recognition based on Multiple HMM. BIOSIGNALS 2019: 171-178 abreu2019detailed

[7] Rubén San Segundo, Juan Manuel Montero, José Moreno-Pimentel, José M. Pardo: HMM Adaptation for Improving a Human Activity Recognition System. Algorithms 9(3): 60 (2016) san2016hmm

[8] Chuangui Yang, Zhu Wang, Botao Wang, Shizhuo Deng, Guangxin Liu, Yuru Kang, Huichao Men: CHAR-HMM: An Improved Continuous Human Activity Recognition Algorithm Based on Hidden Markov Model. MSN 2017: 271-282 yang2017char

[9] Benmansour A, Bouchachia A, Feham M (2015) Multioccupant activity recognition in pervasive smart home environments. ACM Comput Surv 48(3): 34:1–34:36. https://doi.org/10.1145/2835372 benmansour2015multioccupant

[10] Son N. Tran · Tung-Son Ngo · Qing Zhang · Mohan Karunanithi. Mixed-dependency models for multi-resident activity recognition in smart homes. Multimedia Tools and Applications (2020) 79:23445–23460 https://doi.org/10.1007/s11042-020-09093-0 tran2020mixed

[11] Lei Bai, Lina Yao, Xianzhi Wang, Salil S. Kanhere, Bin Guo, and Zhiwen Yu. 2020. Adversarial Multi-view Networks for Activity Recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 4, 2, Article 42 (June 2020), 22 pages. https://doi.org/10.1145/3397323 bai2020adversarial

[12] Yiqiang Chen, Jindong Wang, Meiyu Huang, and Han Yu. 2019. Cross-position activity recognition with stratified transfer learning. Pervasive and Mobile Computing (2019). chen2019cross

[13] Yiqiang Chen, Jindong Wang, Chaohui Yu, Wen Gao, and Xin Qin. 2019. FedHealth: A Federated Transfer Learning Framework for Wearable Healthcare. arXiv preprint arXiv:1907.09173 (2019). chen2020fedhealth

[14] C. Tran and M. M. Trivedi, “3-d posture and gesture recognition for interactivity in smart spaces,” IEEE Trans. Ind. Informat., vol. 8, no. 1, pp. 178–187, Feb. 2012. tran20113

[15] D. Tao, L. Jin, Y. Wang, and X. Li, “Rank preserving discriminant analysis for human behavior recognition on wireless sensor networks,” IEEE Trans. Ind. Informat., vol. 10, no. 1, pp. 813–823, Feb. 2014. tao2013rank

[16] Zhenghua Chen, Qingchang Zhu, Yeng Chai Soh, and Le Zhang. Robust Human Activity Recognition Using Smartphone Sensors via CT-PCA and Online SVM. IEEE TRANSACTIONS ON INDUSTRIAL INFORMATICS, 2017: 3070-3080. chen2017robust

[17] Qingjuan Li, Wei Huangfu, Fadi Farha, Tao Zhu, Shunkun Yang, Liming Chen, Huansheng Ning. Multi-resident type recognition based on ambient sensors activity. Future Generation Computer Systems 112 (2020) 108–115. li2020multi

[18] Lee, S., Dongil Shin, Dongkyoo Shin. Sensor-based Abnormal Behavior Detection Using Autoencoder. 2019: 111–117. lee2019sensor

[19] Thanos G. Stavropoulos, Georgios Meditskos, Stelios Andreadis, Konstantinos Avgerinakis, Katerina Adam, Ioannis Kompatsiaris: Semantic event fusion of computer vision and ambient sensor data for activity recognition to support dementia care. J. Ambient Intell. Humaniz. Comput. 11(8): 3057-3072 (2020). stavropoulos2016semantic

[20] Gadelhag Mohmed, Ahmad Lotfi, Amir Pourabdollah: Employing a deep convolutional neural network for human activity recognition based on binary ambient sensor data. PETRA 2020: 56:1-56:7 mohmed2020employing

[21] Minh-Tien Nguyen, Dung Tien Le, Linh Le, Transformers-based information extraction with limited data for domain-specific business documents, Engineering Applications of Artificial Intelligence,2021. nguyen2021transformers

[22] Gaurav Jain, Shivang Chopra, Suransh Chopra, Anil Singh Parihar. Trans SketchNet: Attention-Based Sketch Recognition Using Transformers. ECAI (2020):2907-2908. jaintranssketchnet

[23] Wenhui Wang, Furu Wei, Li Dong, Hangbo Bao, Nan Yang, Ming Zhou: MiniLM: Deep Self-Attention Distillation for Task-Agnostic Compression of Pre-Trained Transformers. NeurIPS 2020 wang2020minilm

[24] Xiao Jin, Jianfei Yu, Zixiang Ding, Rui Xia, Xiangsheng Zhou, Yaofeng Tu: Hierarchical Multimodal Transformer with Localness and Speaker Aware Attention for Emotion Recognition in Conversations. NLPCC (2) 2020: 41-53 jin2020hierarchical

[25] Haoran Miao, Gaofeng Cheng, Changfeng Gao, Pengyuan Zhang, Yonghong Yan: Transformer-based Online CTC/attention End-to-End Speech Recognition Architecture. CoRR abs/2001.08290 (2020) miao2020transformer

[26] Apoorv Vyas, Angelos Katharopoulos, François Fleuret: Fast Transformers with Clustered Attention. NeurIPS 2020 vyas2020fast

[27] Goro Kobayashi, Tatsuki Kuribayashi, Sho Yokoi, Kentaro Inui: Attention Module is Not Only a Weight: Analyzing Transformers with Vector Norms. CoRR abs/2004.10102 (2020) kobayashi2020attention

[28] Yaru Hao, Li Dong, Furu Wei, Ke Xu: Self-Attention Attribution: Interpreting Information Interactions Inside Transformer. CoRR abs/2004.11207 (2020) hao2020self

[29] Xinxin Han, Jian Ye, Jia Luo, Haiying Zhou: The Effect of Axis-Wise Triaxial Acceleration Data Fusion in CNN-Based Human Activity Recognition. IEICE Trans. Inf. Syst. 103-D(4): 813-824 (2020) han2020effect

[30] Jiahui Huang, Shuisheng Lin, Ning Wang, Guanghai Dai, Yuxiang Xie, Jun Zhou: TSE-CNN: A Two-Stage End-to-End CNN for Human Activity Recognition. IEEE J. Biomed. Health Informatics 24(1): 292-299 (2020) huang2019tse

[31] Kei Yaguchi, Kazukiyo Ikarigawa, Ryo Kawasaki, Wataru Miyazaki, Yuki Morikawa, Chihiro Ito, Masaki Shuzo, Eisaku Maeda: Human activity recognition using multi-input CNN model with FFT spectrograms. UbiComp/ISWC Adjunct 2020: 364-367 yaguchi2020human

[32] Lukun Wang, Ruyue Liu: Human Activity Recognition Based on Wearable Sensor Using Hierarchical Deep LSTM Networks. Circuits Syst. Signal Process. 39(2): 837-856 (2020) wang2020human

[33] Onur Barut, Li Zhou, Yan Luo: Multitask LSTM Model for Human Activity Recognition and Intensity Estimation Using Wearable Sensor Data. IEEE Internet Things J. 7(9): 8760-8768 (2020) barut2020multitask

[34] Luay Alawneh, Belal Mohsen, Mohammad Al-Zinati, Ahmed Shatnawi, Mahmoud Al-Ayyoub: A Comparison of Unidirectional and Bidirectional LSTM Networks for Human Activity Recognition. PerCom Workshops 2020: 1-6 alawneh2020comparison

[35] Schalk Wilhelm Pienaar, Reza Malekian: Human Activity Recognition Using LSTM-RNN Deep Neural Network Architecture. CoRR abs/1905.00599 (2019) pienaar2019human

[36] Praneeth Susarla, Utkarsh Agrawal, Dinesh Babu Jayagopi: Human Weapon-Activity Recognition in Surveillance Videos Using Structural-RNN. MedPRAI 2018: 101-107 susarla2018human

[37] J. Yang, M. N. Nguyen, P. P. San, X. L. Li, and S. Krishnaswamy, “Deep convolutional neural networks on multichannel time series for human activity recognition,” in Twenty-Fourth International Joint Conference on Artificial Intelligence, 2015. yang2015deep

[38] A. Baldominos, Y. Saez, and P. Isasi, “Evolutionary design of convolutional neural networks for human activity recognition in sensor-rich environments,” Sensors, vol. 18, no. 4, p. 1288, 2018. baldominos2018evolutionary

[39] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin: Attention is All you Need. NIPS 2017: 5998-6008 vaswani2017attention

[40] G. Singla, D. J. Cook, and M. Schmitter-Edgecombe, “Recognizing independent and joint activities among multiple residents in smart environments,” Journal of ambient intelligence and humanized computing, vol. 1, no. 1, pp. 57–63, 2010. singla2010recognizing

[41] Cao J, Guo F, Lai X, et al. A Tree-Structure Convolutional Neural Network for Temporal Features Exaction on Sensor-Based Multi-resident Activity Recognition[C]//International Conference on Neural Computing for Advanced Applications. Springer, Singapore, 2020: 513-525. cao2020tree

[42] Kim E. Interpretable and accurate convolutional neural networks for human activity recognition[J]. IEEE Transactions on Industrial Informatics, 2020, 16(11): 7190-7198. kim2020interpretable